Bayes' theorem

From Wikipedia, the free encyclopedia

Bayes' theorem (also known as Bayes' rule or Bayes' law) is a result in probability theory, which relates the conditional and marginal probability distributions of random variables. In some interpretations of probability, Bayes' theorem tells how to update or revise beliefs in light of new evidence a posteriori.

The probability of an event A conditional on another event B is generally different from the probability of B conditional on A. However, there is a definite relationship between the two, and Bayes' theorem is the statement of that relationship.

As a formal theorem, Bayes' theorem is valid in all interpretations of probability. However, frequentist and Bayesian interpretations disagree about the kinds of things to which probabilities should be assigned in applications: frequentists assign probabilities to random events according to their frequencies of occurrence or to subsets of populations as proportions of the whole; Bayesians assign probabilities to propositions that are uncertain. A consequence is that Bayesians have more frequent occasion to use Bayes' theorem. The articles on Bayesian probability and frequentist probability discuss these debates at greater length.


Statement of Bayes' theorem

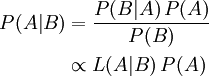

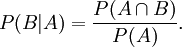

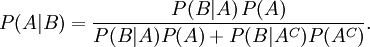

Bayes' theorem relates the conditional and marginal probabilities of stochastic events A and B:

where L(A|B) is the likelihood of A given fixed B. Although in this case the relationship P(B | A) = L(A | B), in other cases likelihood L can be multiplied by a constant factor, so that it is proportional to, but does not equal probability P.

Each term in Bayes' theorem has a conventional name:

- P(A) is the prior probability or marginal probability of A. It is "prior" in the sense that it does not take into account any information about B.

- P(A|B) is the conditional probability of A, given B. It is also called the posterior probability because it is derived from or depends upon the specified value of B.

- P(B|A) is the conditional probability of B given A.

- P(B) is the prior or marginal probability of B, and acts as a normalizing constant.
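The roles of these four terms can be illustrated with a small numerical sketch. The function name `posterior` and all the probabilities below are hypothetical, chosen only to show how the prior, the conditional probability of B given A, and the normalizing constant combine.

```python
def posterior(prior_a: float, p_b_given_a: float, marginal_b: float) -> float:
    """Return P(A|B) = P(B|A) * P(A) / P(B)."""
    if marginal_b <= 0.0:
        raise ValueError("P(B) must be positive for P(A|B) to be defined")
    return p_b_given_a * prior_a / marginal_b


# Hypothetical values, for illustration only.
p_a = 0.3          # prior (marginal) probability P(A)
p_b_given_a = 0.8  # conditional probability P(B|A)
p_b = 0.5          # marginal probability P(B), the normalizing constant

p_a_given_b = posterior(p_a, p_b_given_a, p_b)
print(f"P(A|B) = {p_a_given_b:.2f}")
```

With these numbers, observing B raises the probability of A above its prior, because B is more probable under A (0.8) than it is overall (0.5).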

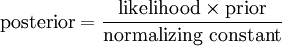

With this terminology, the theorem may be paraphrased as

In words: the posterior probability is proportional to the product of the prior probability and the likelihood.

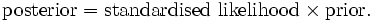

In addition, the ratio P(B|A)/P(B) is sometimes called the standardised likelihood, so the theorem may also be paraphrased as

Derivation from conditional probabilities

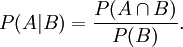

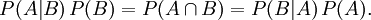

To derive the theorem, we start from the definition of conditional probability. The probability of event A given event B is

Likewise, the probability of event B given event A is

Rearranging and combining these two equations, we find

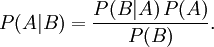

This lemma is sometimes called the product rule for probabilities. Dividing both sides by Pr(B), providing that it is non-zero, we obtain Bayes' theorem:

Alternative forms of Bayes' theorem

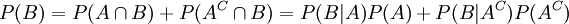

Bayes' theorem is often embellished by noting that

where AC is the complementary event of A (often called "not A"). So the theorem can be restated as

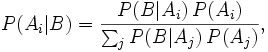

More generally, where {Ai} forms a partition of the event space,

for any Ai in the partition.

See also the law of total probability.
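As a minimal sketch of the partition form, the snippet below computes P(A_i|B) for every member of a partition, obtaining P(B) from the law of total probability. The function name `posterior_over_partition` and the three-event partition with its probabilities are assumptions made for illustration.

```python
def posterior_over_partition(priors, likelihoods):
    """Given priors[i] = P(A_i) and likelihoods[i] = P(B|A_i) over a partition
    {A_i}, return the list of posteriors P(A_i|B)."""
    if abs(sum(priors) - 1.0) > 1e-9:
        raise ValueError("priors must sum to 1 over the partition")
    # Joint terms P(B|A_i) * P(A_i) = P(A_i and B).
    joint = [p * l for p, l in zip(priors, likelihoods)]
    # Law of total probability: P(B) is the sum of the joint terms.
    p_b = sum(joint)
    if p_b == 0.0:
        raise ValueError("P(B) is zero, so the posterior is undefined")
    return [j / p_b for j in joint]


# Hypothetical partition of three events.
priors = [0.5, 0.3, 0.2]        # P(A_1), P(A_2), P(A_3)
likelihoods = [0.1, 0.4, 0.7]   # P(B|A_1), P(B|A_2), P(B|A_3)

posteriors = posterior_over_partition(priors, likelihoods)
for i, p in enumerate(posteriors, start=1):
    print(f"P(A_{i}|B) = {p:.4f}")
```

The two-event form with A and its complement is the special case of a partition with two members.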

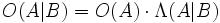

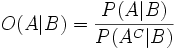

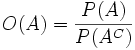

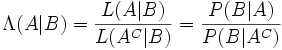

[edit] Bayes' theorem in terms of odds and likelihood ratio

Bayes' theorem can also be written neatly in terms of a likelihood ratio Λ and odds O as

where  are the odds of A given B,

are the odds of A given B,

and  are the odds of A by itself,

are the odds of A by itself,

while  is the likelihood ratio.

is the likelihood ratio.
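The odds form can be checked against the probability form with a short sketch. It assumes the usual definitions, odds O(A) = P(A)/P(A^C) and likelihood ratio Λ = P(B|A)/P(B|A^C); the helper names and the numbers are hypothetical.

```python
def odds(p: float) -> float:
    """Convert a probability p (with p < 1) to odds p / (1 - p)."""
    return p / (1.0 - p)


def prob_from_odds(o: float) -> float:
    """Convert odds o back to a probability o / (1 + o)."""
    return o / (1.0 + o)


# Hypothetical inputs.
p_a = 0.2              # P(A)
p_b_given_a = 0.9      # P(B|A)
p_b_given_not_a = 0.3  # P(B|A^C)

prior_odds = odds(p_a)
likelihood_ratio = p_b_given_a / p_b_given_not_a
posterior_odds = prior_odds * likelihood_ratio  # odds form of Bayes' theorem
via_odds = prob_from_odds(posterior_odds)

# Cross-check with the probability form, expanding P(B) over A and A^C.
p_b = p_b_given_a * p_a + p_b_given_not_a * (1.0 - p_a)
via_probability = p_b_given_a * p_a / p_b

print(via_odds, via_probability)
```

Both routes give the same posterior; the odds form is convenient because the update is a single multiplication.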

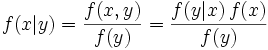

[edit] Bayes' theorem for probability densities

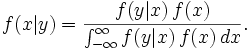

There is also a version of Bayes' theorem for continuous distributions. It is somewhat harder to derive, since probability densities, strictly speaking, are not probabilities, so Bayes' theorem has to be established by a limit process; see Papoulis (citation below), Section 7.3 for an elementary derivation. Bayes's theorem for probability densities is formally similar to the theorem for probabilities:

and there is an analogous statement of the law of total probability:

As in the discrete case, the terms have standard names. f(x, y) is the joint distribution of X and Y, f(x|y) is the posterior distribution of X given Y=y, f(y|x) = L(x|y) is (as a function of x) the likelihood function of X given Y=y, and f(x) and f(y) are the marginal distributions of X and Y respectively, with f(x) being the prior distribution of X.

Here we have indulged in a conventional abuse of notation, using f for each one of these terms, although each one is really a different function; the functions are distinguished by the names of their arguments.
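A rough numerical sketch of the density form: the posterior density is approximated on a grid, with the integral in the denominator replaced by a midpoint-rule sum. The scenario (a uniform prior on an unknown success probability x and a binomial likelihood for k successes in n trials) and all names are assumptions chosen for illustration, not taken from the text above.

```python
from math import comb


def grid_posterior(prior, likelihood, xs, dx):
    """Approximate f(x|y) on the grid xs as f(y|x) f(x) / integral(f(y|x) f(x) dx),
    using a midpoint-rule sum for the integral."""
    unnormalized = [likelihood(x) * prior(x) for x in xs]
    f_y = sum(unnormalized) * dx  # approximates the marginal density f(y)
    return [u / f_y for u in unnormalized]


n, k = 10, 7  # hypothetical data: 7 successes in 10 trials


def prior(x):
    """Uniform prior density f(x) on [0, 1]."""
    return 1.0


def likelihood(x):
    """Binomial likelihood f(y|x) of k successes in n trials."""
    return comb(n, k) * x**k * (1.0 - x) ** (n - k)


m = 1000
dx = 1.0 / m
xs = [(i + 0.5) * dx for i in range(m)]  # midpoints of m equal cells on [0, 1]

post = grid_posterior(prior, likelihood, xs, dx)
post_mean = sum(x * p for x, p in zip(xs, post)) * dx
print(f"posterior mean is approximately {post_mean:.4f}")
```

For a uniform prior the exact posterior mean is (k + 1)/(n + 2), which gives a convenient check on the grid approximation.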

Abstract Bayes' theorem

Given two mutually absolutely continuous probability measures $P \sim Q$ on the probability space $(\Omega, \mathcal{F})$ and a sigma-algebra $\mathcal{G} \subset \mathcal{F}$, the abstract Bayes theorem for an $\mathcal{F}$-measurable random variable X becomes

$$E_P[X|\mathcal{G}] = \frac{E_Q\left[\frac{dP}{dQ} X \,\middle|\, \mathcal{G}\right]}{E_Q\left[\frac{dP}{dQ} \,\middle|\, \mathcal{G}\right]}.$$

This formulation is used in Kalman filtering to find Zakai equations. It is also used in financial mathematics for change of numeraire techniques.
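On a finite sample space the change-of-measure formula can be verified directly. The sketch below assumes a six-point sample space, two strictly positive probability vectors P and Q, and a sub-sigma-algebra generated by a partition into blocks; on each block a conditional expectation is just a weighted average. All names and numbers are hypothetical.

```python
def cond_exp(values, measure, block):
    """Conditional expectation of `values` under `measure` on one partition block:
    the measure-weighted average of the values over that block."""
    mass = sum(measure[w] for w in block)
    return sum(values[w] * measure[w] for w in block) / mass


# Hypothetical finite sample space {0, ..., 5}.
P = [0.10, 0.20, 0.15, 0.25, 0.05, 0.25]
Q = [0.20, 0.10, 0.20, 0.20, 0.20, 0.10]
X = [1.0, -2.0, 3.0, 0.5, 4.0, -1.0]
blocks = [[0, 1], [2, 3, 4], [5]]  # partition generating the sub-sigma-algebra

# Radon-Nikodym derivative dP/dQ, pointwise on a finite space.
Z = [p / q for p, q in zip(P, Q)]
ZX = [z * x for z, x in zip(Z, X)]

direct = [cond_exp(X, P, b) for b in blocks]
via_q = [cond_exp(ZX, Q, b) / cond_exp(Z, Q, b) for b in blocks]

for b, d, v in zip(blocks, direct, via_q):
    print(b, round(d, 6), round(v, 6))
```

The two columns agree block by block, which is the finite-space content of the formula: expectations under P can be computed entirely under Q once dP/dQ is known.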

Extensions of Bayes' theorem

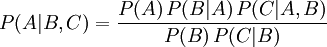

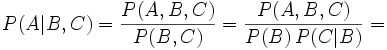

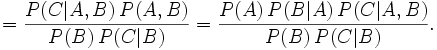

Theorems analogous to Bayes' theorem hold in problems with more than two variables. For example:

This can be derived in several steps from Bayes' theorem and the definition of conditional probability:

A general strategy is to work with a decomposition of the joint probability, and to marginalize (integrate) over the variables that are not of interest. Depending on the form of the decomposition, it may be possible to prove that some integrals must be 1, and thus they fall out of the decomposition; exploiting this property can reduce the computations very substantially. A Bayesian network, for example, specifies a factorization of a joint distribution of several variables in which the conditional probability of any one variable given the remaining ones takes a particularly simple form (see Markov blanket).
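The strategy of decomposing a joint probability and marginalizing over unwanted variables can be sketched on a small discrete example. The snippet checks one three-variable analogue of Bayes' theorem, P(A|B,C) = P(A) P(B|A) P(C|A,B) / (P(B) P(C|B)), which follows from the definition of conditional probability. The joint table and the helper `prob` are hypothetical; sums take the place of integrals because the variables are discrete.

```python
from itertools import product

# Hypothetical joint distribution P(A, B, C) over three binary variables,
# built from arbitrary positive weights and normalized to sum to 1.
weights = [1, 2, 3, 4, 5, 6, 7, 8]
total = sum(weights)
joint = {abc: w / total for abc, w in zip(product((0, 1), repeat=3), weights)}

NAMES = ("a", "b", "c")


def prob(**fixed):
    """Probability of the assignments in `fixed`, marginalizing (summing)
    over every variable that is not mentioned."""
    return sum(
        p
        for abc, p in joint.items()
        if all(abc[NAMES.index(name)] == value for name, value in fixed.items())
    )


a, b, c = 1, 0, 1

# Left-hand side: P(A|B,C) straight from the definition.
lhs = prob(a=a, b=b, c=c) / prob(b=b, c=c)

# Right-hand side: the factorized form.
p_a = prob(a=a)
p_b_given_a = prob(a=a, b=b) / prob(a=a)
p_c_given_ab = prob(a=a, b=b, c=c) / prob(a=a, b=b)
p_b = prob(b=b)
p_c_given_b = prob(b=b, c=c) / prob(b=b)
rhs = p_a * p_b_given_a * p_c_given_ab / (p_b * p_c_given_b)

print(lhs, rhs)
```

Enumerating the full joint table is only practical for a handful of variables, which is why factorizations such as those in a Bayesian network matter in larger problems.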



References

Versions of the essay

- Thomas Bayes (1763), "An Essay towards solving a Problem in the Doctrine of Chances. By the late Rev. Mr. Bayes, F. R. S. communicated by Mr. Price, in a letter to John Canton, A. M. F. R. S.", Philosophical Transactions, Giving Some Account of the Present Undertakings, Studies and Labours of the Ingenious in Many Considerable Parts of the World 53:370–418.

- Thomas Bayes (1763/1958) "Studies in the History of Probability and Statistics: IX. Thomas Bayes' Essay Towards Solving a Problem in the Doctrine of Chances", Biometrika 45:296–315. (Bayes' essay in modernized notation)

- Thomas Bayes "An essay towards solving a Problem in the Doctrine of Chances". (Bayes' essay in the original notation)

Commentaries

- G. A. Barnard (1958) "Studies in the History of Probability and Statistics: IX. Thomas Bayes' Essay Towards Solving a Problem in the Doctrine of Chances", Biometrika 45:293–295. (biographical remarks)

- Daniel Covarrubias. "An Essay Towards Solving a Problem in the Doctrine of Chances". (an outline and exposition of Bayes' essay)

- Stephen M. Stigler (1982). "Thomas Bayes' Bayesian Inference," Journal of the Royal Statistical Society, Series A, 145:250–258. (Stigler argues for a revised interpretation of the essay; recommended)

- Isaac Todhunter (1865). A History of the Mathematical Theory of Probability from the time of Pascal to that of Laplace, Macmillan. Reprinted 1949, 1956 by Chelsea and 2001 by Thoemmes.

Additional material

- Pierre-Simon Laplace (1774). "Mémoire sur la Probabilité des Causes par les Événements", Savants Étranges 6:621–656; also Œuvres 8:27–65.

- Pierre-Simon Laplace (1774/1986). "Memoir on the Probability of the Causes of Events", Statistical Science 1(3):364–378.

- Stephen M. Stigler (1986). "Laplace's 1774 memoir on inverse probability", Statistical Science 1(3):359–378.

- Stephen M. Stigler (1983). "Who Discovered Bayes' Theorem?" The American Statistician 37(4):290–296.

- Jeff Miller et al. Earliest Known Uses of Some of the Words of Mathematics (B). (very informative; recommended)

- Athanasios Papoulis (1984). Probability, Random Variables, and Stochastic Processes, second edition. New York: McGraw-Hill.

- James Joyce (2003). "Bayes' Theorem", Stanford Encyclopedia of Philosophy.

- The on-line textbook: Information Theory, Inference, and Learning Algorithms, by David J.C. MacKay provides an up to date overview of the use of Bayes' theorem in information theory and machine learning.

- Stanford Encyclopedia of Philosophy: Bayes' Theorem provides a comprehensive introduction to Bayes' theorem.

- Eric W. Weisstein, Bayes' Theorem at MathWorld.

- Bayes' theorem at PlanetMath.

- Yudkowsky, Eliezer S. (2003), "An Intuitive Explanation of Bayesian Reasoning"